The last year and a half was a whirlwind, and so I never got around to blogging about the fruits of my dissertation: Mandibular growth in Australopithecus robustus… Sorry! So this post will be the first installment of my description of the outcome of the project. The A. robustus age-series of jaws allowed me to address three questions: [1] can we statistically analyze patterns of size change in a fossil hominid; [2] how ancient is the human pattern of subadult growth, a key aspect of our life history; and [3] how does postnatal growth contribute to anatomical differences between species? This post will look at question [1] and the “zeta test,” a new method I devised to answer it.

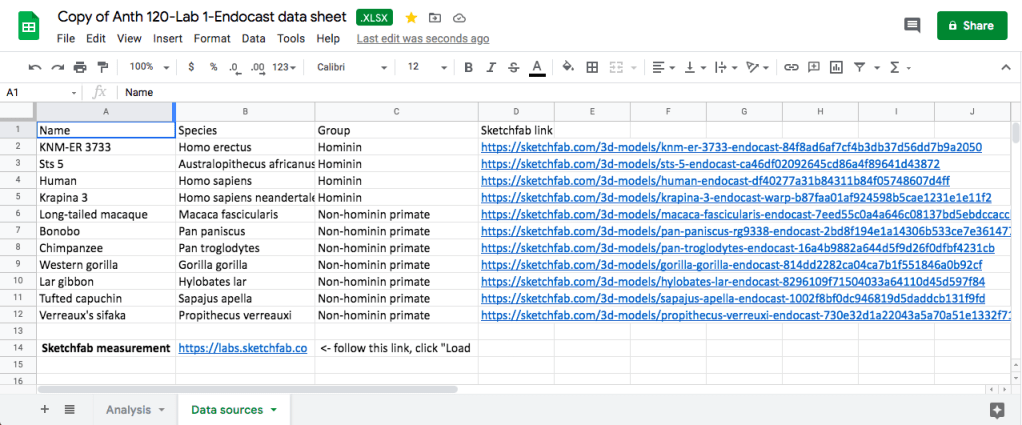

Over a year ago, and exactly one year ago, I described some of the rationale for my dissertation. Basically, in order to address questions [2-3] above, I had to come up with a way to analyze age-related variation in a fossil sample. A dismal fossil record means that fossil samples are small and specimens fragmentary – not ideal for statistical analysis. The A. robustus mandibular series, however, contains a number of individuals across ontogeny, which makes it better suited to the job than most other samples. Still, a few specimens are rather complete while most are fairly fragmentary, meaning it is impossible to make all the same observations (i.e. take the same measurements) on each individual. How can growth be understood in the face of these challenges to sample size and homology?

Because traditional parametric statistics – basically growth curves – are ill-suited for fossil samples, I devised a new technique based on resampling statistics. This method, which I ended up calling the “zeta test,” rephrases the question of growth from a descriptive to a comparative standpoint: is the amount of age-related size change (growth) in the small fossil sample likely to be found in a larger comparative sample? Because a pair of specimens is likelier to share traits than an entire ontogenetic series, the zeta test randomly grabs a pair of differently-aged specimens from one sample, then a pair of similarly aged specimens from the second sample, and compares the two samples’ size change based only on the traits those two pairs share (see subsequent posts). Pairwise comparisons maximize the number of subadults that can be compared, and further address the problem of homology. Then you repeat this random selection process a bajillion times, and you’ve got a distribution of test statistics describing how the two samples differ in size change between different ages. Here’s a schematic:

1. Randomly grab a fossil (A) and a human (B) in one dental stage (‘younger’), then a fossil and a human in a different dental stage (‘older’).
2. Using only traits they all share, calculate relative size change in each species (older/younger): the zeta test statistic describes the difference in size change between species.
3. Calculate as many zetas as you can, creating a distribution giving an idea of how similar/different species’ growth is.
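For the code-minded, here is a rough Python sketch of a single pass through steps 1-2. It is not my R program: the specimen layout (a `stage` plus a dictionary of `traits`), the function names, and the toy measurements are all made up for illustration, and summarizing the shared traits with a geometric mean of the older/younger ratios is an assumption of this sketch.

```python
import math
import random

def geometric_mean(values):
    """Geometric mean of positive numbers."""
    return math.exp(sum(math.log(v) for v in values) / len(values))

def pick(sample, stage, rng):
    """Randomly choose one specimen of the given dental stage."""
    return rng.choice([s for s in sample if s["stage"] == stage])

def zeta_once(sample_a, sample_b, rng):
    """One resampled zeta: size change in A minus size change in B."""
    # Step 1: two different dental stages that both samples contain.
    stages = sorted({s["stage"] for s in sample_a} & {s["stage"] for s in sample_b})
    if len(stages) < 2:
        return None
    younger, older = sorted(rng.sample(stages, 2))
    a_young, a_old = pick(sample_a, younger, rng), pick(sample_a, older, rng)
    b_young, b_old = pick(sample_b, younger, rng), pick(sample_b, older, rng)

    # Step 2: use only the traits preserved on all four specimens.
    shared = sorted(set(a_young["traits"]) & set(a_old["traits"])
                    & set(b_young["traits"]) & set(b_old["traits"]))
    if not shared:
        return None  # nothing comparable in this draw
    # Assumption: overall size change = geometric mean of older/younger ratios.
    change_a = geometric_mean([a_old["traits"][t] / a_young["traits"][t] for t in shared])
    change_b = geometric_mean([b_old["traits"][t] / b_young["traits"][t] for t in shared])
    return {
        "zeta": change_a - change_b,
        "stages": (younger, older),
        "ids": (a_young["id"], a_old["id"], b_young["id"], b_old["id"]),
        "n_traits": len(shared),
    }

# Toy data (invented values): only "height" is preserved on all four specimens.
fossils = [
    {"id": "A1", "stage": 1, "traits": {"height": 10.0, "breadth": 20.0}},
    {"id": "A2", "stage": 2, "traits": {"height": 20.0, "length": 5.0}},
]
humans = [
    {"id": "B1", "stage": 1, "traits": {"height": 10.0, "breadth": 12.0}},
    {"id": "B2", "stage": 2, "traits": {"height": 15.0, "breadth": 18.0}},
]
result = zeta_once(fossils, humans, random.Random(0))
print(result)
```

Step 3 is just calling `zeta_once` over and over and keeping every result that isn't `None`.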

The zeta statistic is the signed difference between two ratios (species A’s size change minus species B’s) – so positive values mean species A grew more than species B, while negative values mean the opposite. If 0 (zero, no difference) falls within the great majority of resampled statistics, you cannot reject the hypothesis that the two species follow the same pattern of growth. During each resampling, the procedure records the identity and age of each specimen, as well as the number of traits they share. This allows patterns of similarity and difference to be explored in more detail. It also makes the program run for a very long time. I wrote the program for the zeta test in the statistical computing language R, and the code is freely available. (Actually, these files are from April, and at my University of Michigan website; until we get the Nazarbayev University webpage up and running, you can email me for the updated code.)
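To make the “great majority” rule concrete, here is a small Python sketch of one way to check whether zero sits inside the central bulk of a zeta distribution. The 95% cutoff and the function names are assumptions for illustration; any sensible coverage could be swapped in.

```python
import math

def central_interval(zetas, coverage=0.95):
    """Bounds enclosing (at least) the central `coverage` share of the zetas."""
    ordered = sorted(zetas)
    n = len(ordered)
    tail = (1.0 - coverage) / 2.0
    lower = ordered[math.floor(tail * (n - 1))]
    upper = ordered[math.ceil((1.0 - tail) * (n - 1))]
    return lower, upper

def zero_within(zetas, coverage=0.95):
    """True if zero lies inside the central interval (cannot reject 'same growth')."""
    lower, upper = central_interval(zetas, coverage)
    return lower <= 0.0 <= upper

# Toy distributions (invented numbers, not real results).
centered = [i / 100 for i in range(-50, 51)]     # symmetric around zero
shifted = [0.1 + i / 1000 for i in range(101)]   # every value is positive

print(zero_within(centered))
print(zero_within(shifted))
```

A distribution straddling zero gives `True` (no detectable difference), while one sitting entirely on the positive side gives `False`.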

The zeta test itself is new, but it’s based on/influenced by other techniques: using resampling to compare samples with missing data was inspired by Gordon et al. (2008). The calculation of ‘growth’ in one sample, and the comparison between samples, is very similar to Euclidean Distance Matrix Analysis (EDMA), devised in the 1990s by Subhash Lele and Joan Richtsmeier (e.g. Richtsmeier and Lele, 1993). But since this was a new method, I was glad to be able to show that it works!

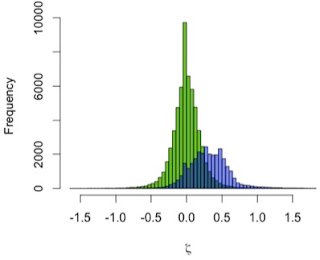

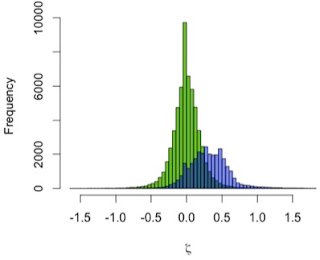

I used the zeta test to compare mandibular growth in a sample of 13 A. robustus and 122 recent humans. I first showed that the method behaves as expected by using it to compare the human sample with itself, resampling two pairs of humans rather than a pair of humans and a pair of A. robustus. The green distribution in the graph to the left shows zeta statistics for all possible pairwise comparisons of humans. Just as expected, it’s strongly centered at zero: only one pattern of growth should be detected in a single sample. (Note, however, the range of variation in the green zetas, the result of individual variation in a cross-sectional sample.)

In blue, the human-A. robustus statistics show a markedly different distribution. They are shifted to the right – positive values – indicating that for a given comparison between pairs of specimens, A. robustus increases in size more than humans do on average.
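If you want to convince yourself of this logic without any fossils, here is a toy Python simulation. Everything in it is synthetic: the made-up “sizes,” the growth slopes, the sample sizes, and the function names are assumptions for illustration, and it draws random comparisons rather than all possible ones. A sample compared with itself should give zetas centered on zero, while a comparison against a faster-growing sample should be shifted positive.

```python
import random
import statistics

rng = random.Random(1)
STAGES = list(range(1, 6))  # five dental eruption stages

def make_sample(slope, n_per_stage):
    """Synthetic cross-sectional sample: mean size rises by `slope` per stage."""
    return {stage: [rng.gauss(20 + slope * stage, 1.5) for _ in range(n_per_stage)]
            for stage in STAGES}

def size_change(sample, younger, older):
    """Older/younger size ratio for one randomly drawn pair of specimens."""
    return rng.choice(sample[older]) / rng.choice(sample[younger])

def resample_zetas(sample_a, sample_b, n_iter=5000):
    """Zeta = size change in A minus size change in B, over many random draws."""
    zetas = []
    for _ in range(n_iter):
        younger, older = sorted(rng.sample(STAGES, 2))
        zetas.append(size_change(sample_a, younger, older)
                     - size_change(sample_b, younger, older))
    return zetas

slow_growers = make_sample(slope=5, n_per_stage=24)
fast_growers = make_sample(slope=10, n_per_stage=24)

self_zetas = resample_zetas(slow_growers, slow_growers)   # same sample twice
cross_zetas = resample_zetas(fast_growers, slow_growers)  # A grows more than B

median_self = statistics.median(self_zetas)
median_cross = statistics.median(cross_zetas)
print(round(median_self, 3), round(median_cross, 3))
```

The self-comparison still has plenty of spread around zero, which is the simulated version of the individual variation in the green distribution.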

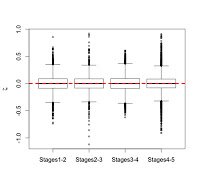

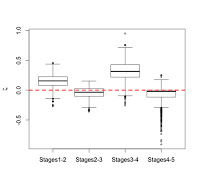

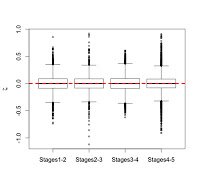

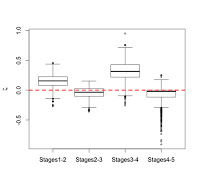

We can also examine how zeta statistics are distributed between different age groups (above). I had broken my sample into five age groups based on stage of dental eruption – the plots above show the distribution of zeta statistics between successive eruption stages, the human-only comparison on the left and the human-A. robustus comparison on the right. As expected, the human-only statistics center around zero (red dashed line) across ontogeny, while the human-A. robustus statistics deviate from zero markedly between dental stages 1-2 and 3-4. I’ll explain the significance of this in the next post. What’s important here is that the zeta test seems to be working – it fails to detect a difference when there isn’t one (human-only comparisons). Even better, it detects a difference between humans and A. robustus, which makes sense when you look at the fossils, but had never been demonstrated before.
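Because each resample records which dental stages were compared, breaking the zetas down by stage transition is mostly bookkeeping. A minimal Python sketch, assuming each record is a dictionary with hypothetical `stages` and `zeta` keys (the values below are invented, not my results):

```python
import statistics
from collections import defaultdict

def summarize_by_transition(records):
    """Group zetas from successive-stage comparisons (1-2, 2-3, ...) and summarize."""
    groups = defaultdict(list)
    for record in records:
        younger, older = record["stages"]
        if older - younger == 1:  # keep only neighboring eruption stages
            groups[(younger, older)].append(record["zeta"])
    return {
        transition: {"n": len(zetas), "median": statistics.median(zetas)}
        for transition, zetas in sorted(groups.items())
    }

# Invented records for illustration only.
records = [
    {"stages": (1, 2), "zeta": 0.25},
    {"stages": (1, 2), "zeta": 0.75},
    {"stages": (2, 3), "zeta": -0.1},
    {"stages": (1, 3), "zeta": 0.9},  # skipped: not a successive-stage comparison
]
summary = summarize_by_transition(records)
for transition, stats in summary.items():
    print(transition, stats)
```

Each transition then gets its own distribution, which is what the per-stage plots summarize.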

So there you go, a new statistical method for assessing fossil samples. The next two installments will discuss the results of the zeta test for overall size (important for life history), and for individual traits (measurements; important for evolutionary developmental biology). Stay tuned!

Several years ago, when I first became interested in growth and development, I changed this blog’s header to show this species’ subadult jaws – it was only last year that I realized this would become the focus of my graduate career.

References

Gordon AD, Green DJ, & Richmond BG (2008). Strong postcranial size dimorphism in Australopithecus afarensis: results from two new resampling methods for multivariate data sets with missing data. American Journal of Physical Anthropology, 135 (3), 311-328. PMID: 18044693

Richtsmeier JT, & Lele S (1993). A coordinate-free approach to the analysis of growth patterns: models and theoretical considerations. Biological Reviews, 68 (3), 381-411. PMID: 8347767